A robot’s got to know its limitations. But that doesn’t mean it has to accept them. This one in particular uses tools to expand its capabilities, commandeering nearby items to construct ramps and bridges. It’s satisfying to watch but, of course, also a little worrying.

This research, from Cornell and the University of Pennsylvania, is essentially about making a robot take stock of its surroundings and recognize something it can use to accomplish a task that it knows it can’t do on its own. The robot is actually more like a team of robots, since its parts can detach from one another and accomplish things on their own. But you didn’t come here to debate the multiplicity or unity of modular robotic systems! That’s for the folks at the IEEE International Conference on Robotics and Automation, where this paper was presented (and Spectrum got the first look).

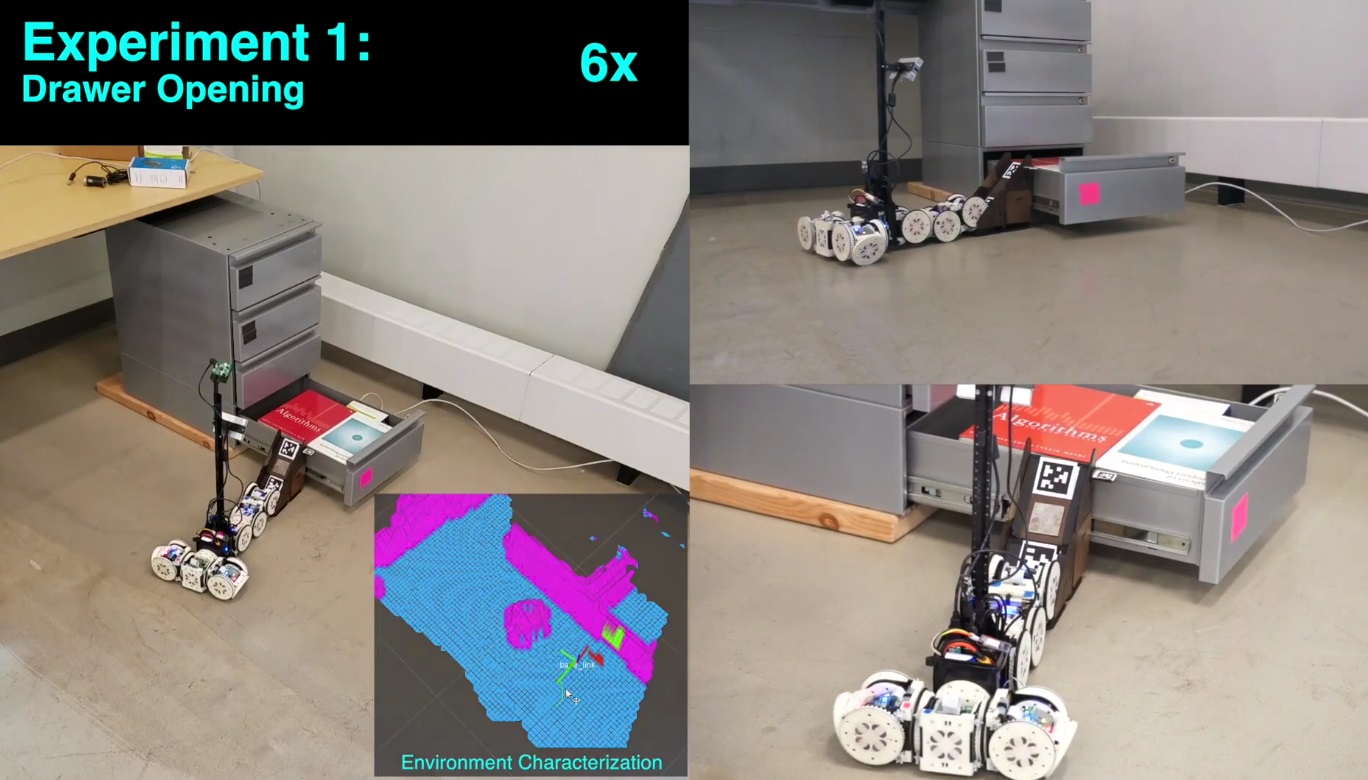

SMORES-EP is the robot in play here, and the researchers have given it a specific breadth of knowledge. It knows how to navigate its environment, but also how to inspect it with its little mast-cam and, from that inspection, derive meaningful data such as whether an object can be rolled over or a gap can be crossed.

It also knows how to interact with certain objects, and what they do; for instance, it can use its built-in magnets to pull open a drawer, and it knows that a ramp can be used to roll up to an object of a given height or lower.
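To make the ramp rule concrete, here is a minimal sketch of that kind of check. The names (`Ramp`, `max_height_cm`, `ramp_helps`) and the numbers are hypothetical and chosen only for illustration; the article does not describe how the researchers actually encode this knowledge.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ramp:
    # Tallest ledge this ramp lets the robot reach (hypothetical figure).
    max_height_cm: float


def can_climb_unaided(ledge_height_cm: float, max_step_cm: float) -> bool:
    """True if the robot can simply roll over the obstacle by itself."""
    return ledge_height_cm <= max_step_cm


def ramp_helps(ramp: Ramp, ledge_height_cm: float, max_step_cm: float) -> bool:
    """True if the ledge is too tall to roll over but within the ramp's reach."""
    if can_climb_unaided(ledge_height_cm, max_step_cm):
        return False  # no tool needed
    return ledge_height_cm <= ramp.max_height_cm


if __name__ == "__main__":
    ramp = Ramp(max_height_cm=10.0)
    print(ramp_helps(ramp, ledge_height_cm=8.0, max_step_cm=2.0))
    print(ramp_helps(ramp, ledge_height_cm=12.0, max_step_cm=2.0))
```

The point is only that "a ramp reaches objects of a given height or lower" is a simple comparison once the camera has supplied a height estimate.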

A high-level planning system directs the robots (or robot parts) based on knowledge that no single part needs to have. For example, given the instruction to find out what’s in a drawer, the planner understands that to accomplish that, the drawer needs to be open; for it to be open, a magnet-bot will have to attach to it from this or that angle, and so on. And if something else is necessary, for example a ramp, it will direct that to be placed as well.
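The goal-to-precondition reasoning described here can be sketched as a toy backward-chaining planner. This is not the researchers' planner: the action names, the fact names, and the `plan` function are all hypothetical, and the real system works from camera data rather than a hand-written table.

```python
# Hypothetical action table: each action lists the facts it needs
# and the facts it makes true. None of these names come from the paper.
ACTIONS = {
    "place_ramp": {"needs": ["ramp_nearby"], "gives": ["ramp_in_place"]},
    "climb_ramp": {"needs": ["ramp_in_place"], "gives": ["on_ledge"]},
    "attach_magnet": {"needs": ["on_ledge"], "gives": ["magnet_attached"]},
    "pull_drawer": {"needs": ["magnet_attached"], "gives": ["drawer_open"]},
    "inspect_drawer": {"needs": ["drawer_open"], "gives": ["contents_known"]},
}


def plan(goal, facts, actions=ACTIONS):
    """Return an ordered list of action names that achieves `goal`, or None."""
    steps = []
    known = set(facts)

    def achieve(fact, pending):
        if fact in known:
            return True
        if fact in pending:  # already trying to achieve this: avoid loops
            return False
        for name, action in actions.items():
            if fact not in action["gives"]:
                continue
            # Remember the current state so a failed attempt can be undone.
            mark, snapshot = len(steps), set(known)
            if all(achieve(need, pending | {fact}) for need in action["needs"]):
                steps.append(name)
                known.update(action["gives"])
                return True
            del steps[mark:]
            known.clear()
            known.update(snapshot)
        return False

    return steps if achieve(goal, frozenset()) else None


if __name__ == "__main__":
    print(plan("contents_known", {"ramp_nearby"}))
    print(plan("contents_known", set()))
```

With a ramp nearby, the sketch works backward from "know the contents" to "drawer open" to "magnet attached" and so on, then returns the steps in execution order; with no ramp available it returns `None`, since nothing in the table can produce one.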

The experiment shown in this video has the robot system demonstrating how this could work in a situation where the robot must accomplish a high-level task using this limited but surprisingly complex body of knowledge.

In the video, the robot is told to check the drawers for certain objects. In the first drawer, the target objects aren’t present, so it must inspect the next one up. But it’s too high — so it needs to get on top of the first drawer, which luckily for the robot is full of books and constitutes a ledge. The planner sees that a ramp block is nearby and orders it to be put in place, and then part of the robot detaches to climb up and open the drawer, while the other part maneuvers into place to check the contents. Target found!

In the next task, it must cross a gap between two desks. Fortunately, someone left the parts of a bridge just lying around. The robot puts the bridge together, places it in position after checking the scene, and sends its forward half rolling towards the goal.

These cases may seem rather staged, but this isn’t about the robot itself and its ability to tell what would make a good bridge. That comes later. The idea is to create systems that logically approach real-world situations based on real-world data and solve them using real-world objects. Being able to construct a bridge from scratch is nice, but unless you know what a bridge is for, when and how it should be applied, where it should be carried and how to get over it, and so on, it’s just a part in search of a whole.

Likewise, many a robot with a perfectly good drawer-pulling hand will have no idea that you need to open a drawer before you can tell what’s in it, or that maybe you should check other drawers if the first doesn’t have what you’re looking for!

Such basic problem-solving is something we take for granted, but nothing can be taken for granted when it comes to robot brains. Even in the experiment described above, the robot failed multiple times for multiple reasons while attempting to accomplish its goals. That’s okay — we all have a little room to improve.

from TechCrunch https://ift.tt/2xtg3jX
