Adobe XD, the company’s platform for designing and prototyping user interfaces and experiences, is adding support for a different kind of application to its lineup: voice apps. Those could be applications that are purely voice-based — maybe for Alexa or Google Home — or mobile apps that also take voice input.

The voice experience is powered by Sayspring, which Adobe acquired earlier this year. As Sayspring’s founder and former CEO Mark Webster told me, the team has been working on integrating these features into XD since he joined the company.

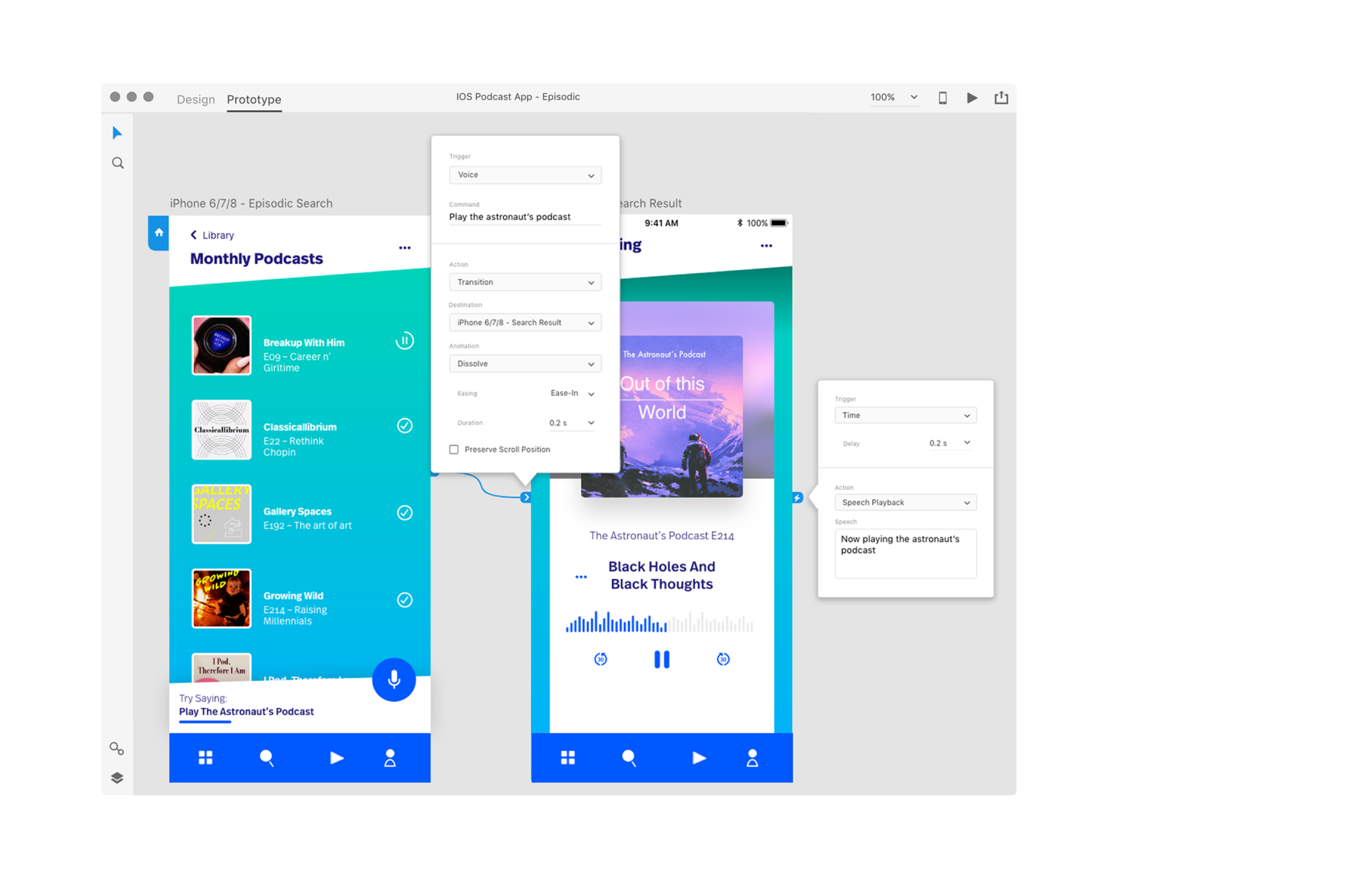

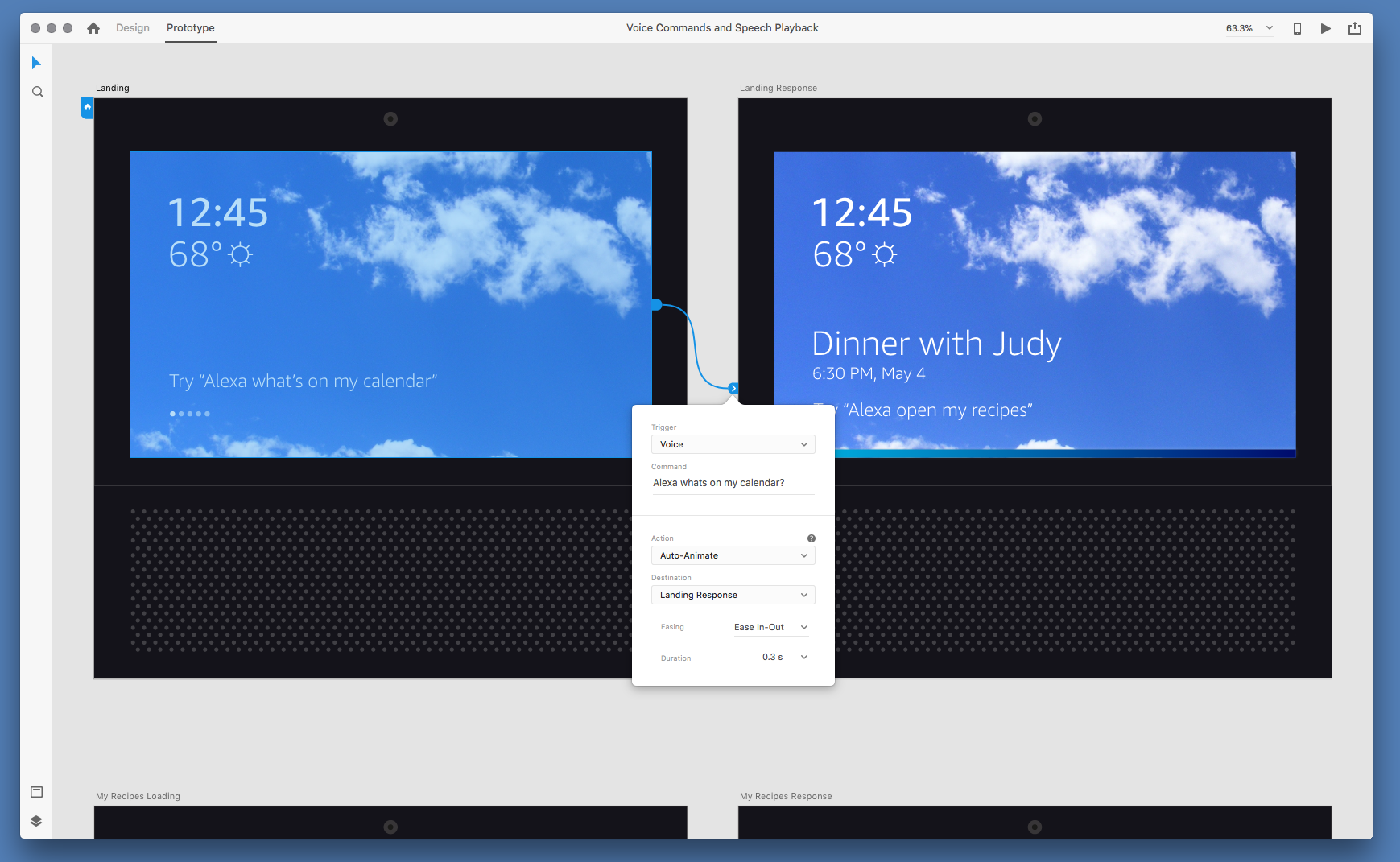

To support designers who are building these apps, XD now includes voice triggers and speech playback. That user experience is tightly integrated with the rest of XD, and in a demo I saw ahead of today's reveal, building voice apps didn't look all that different from prototyping any other kind of app in XD.

To make the user experience realistic, XD can now trigger speech playback when it hears a specific word or phrase. This isn't a fully featured natural language understanding system, of course, since the idea here is only to mock up what the user experience would look like.

“Voice is weird,” Webster told me. “It’s both a platform like Amazon Alexa and the Google Assistant, but also a form of interaction […] Our starting point has been to treat it as a form of interaction — and how do we give designers access to the medium of voice and speech in order to create all kinds of experiences. A huge use case for that would be designing for platforms like Amazon Alexa, Google Assistant and Microsoft Cortana.”

And these days, with the advent of smart displays from Google and its partners, as well as the Amazon Echo Show, these platforms are also becoming increasingly visual. As Webster noted, the combination of screen design and voice is growing in importance, so adding voice technology to XD seemed like a no-brainer.

Andrew Shorten, Adobe's product management lead for XD, stressed that before Adobe acquired Sayspring and integrated it into XD, XD users had a hard time building voice experiences. "We started to have interactions with customers who were beginning to experiment with creating experiences for voice," he said. "And then they were describing the pain and the frustration, and all the tools that they'd use to be able to prototype didn't help them in this regard. And so they had to pull back to working with developers and bringing people in to help with making prototypes."

XD is getting a few other new features, too. It now supports a full range of plugins, for example, which are meant to automate some tasks and integrate XD with third-party tools.

Also new is auto-animate, which brings relatively complex animations to XD that appear when you transition between screens in your prototype app. The interesting part here, of course, is that this is automated. To see it in action, all you have to do is duplicate an existing artboard, modify some of the elements on the pages and tell XD to handle the animations for you.

The release also features a number of other new tools. Drag Gestures now allows you to re-create the standard drag gestures of mobile apps, for building an image carousel, for example, while linked symbols make it easier to apply changes across artboards. There is also now a deeper integration with Adobe Illustrator, and you can export XD designs to After Effects, Adobe's animation tool, for those cases where you need full control over the animations inside your applications.

from TechCrunch https://ift.tt/2P4cbgG
