Disney is unequivocally the world’s leader in 3D simulations of hair — something of a niche talent in a way, but useful if you make movies like Tangled, where hair is basically the main character. A new bit of research from the company makes it easier for animators to have hair follow their artistic intent while also moving realistically.

The problem Disney Research aimed to solve is a compromise animators have had to accept when getting a character’s hair to do what the scene requires. While the hair will ultimately be rendered in glorious high definition and with detailed physics, it’s too computationally expensive to do that while composing the scene.

Should a young warrior in her tent be wearing her hair up or down? Should it fly out when she turns her head quickly to draw attention to the movement, or stay weighed down so the audience isn’t distracted? Trying various combinations of these things can eat up hours of rendering time. So, like any smart artist, they rough it out first:

“Artists typically resort to lower-resolution simulations, where iterations are faster and manual edits possible,” reads the paper describing the new system. “But unfortunately, the parameter values determined in this way can only serve as an initial guess for the full-resolution simulation, which often behaves very different from its coarse counterpart when the same parameters are used.”

The solution proposed by the researchers is basically to use that “initial guess” to inform a high-resolution simulation of just a handful of hairs. These “guide” hairs act as feedback for the original simulation, giving a much better idea of how the rest will act when fully rendered.

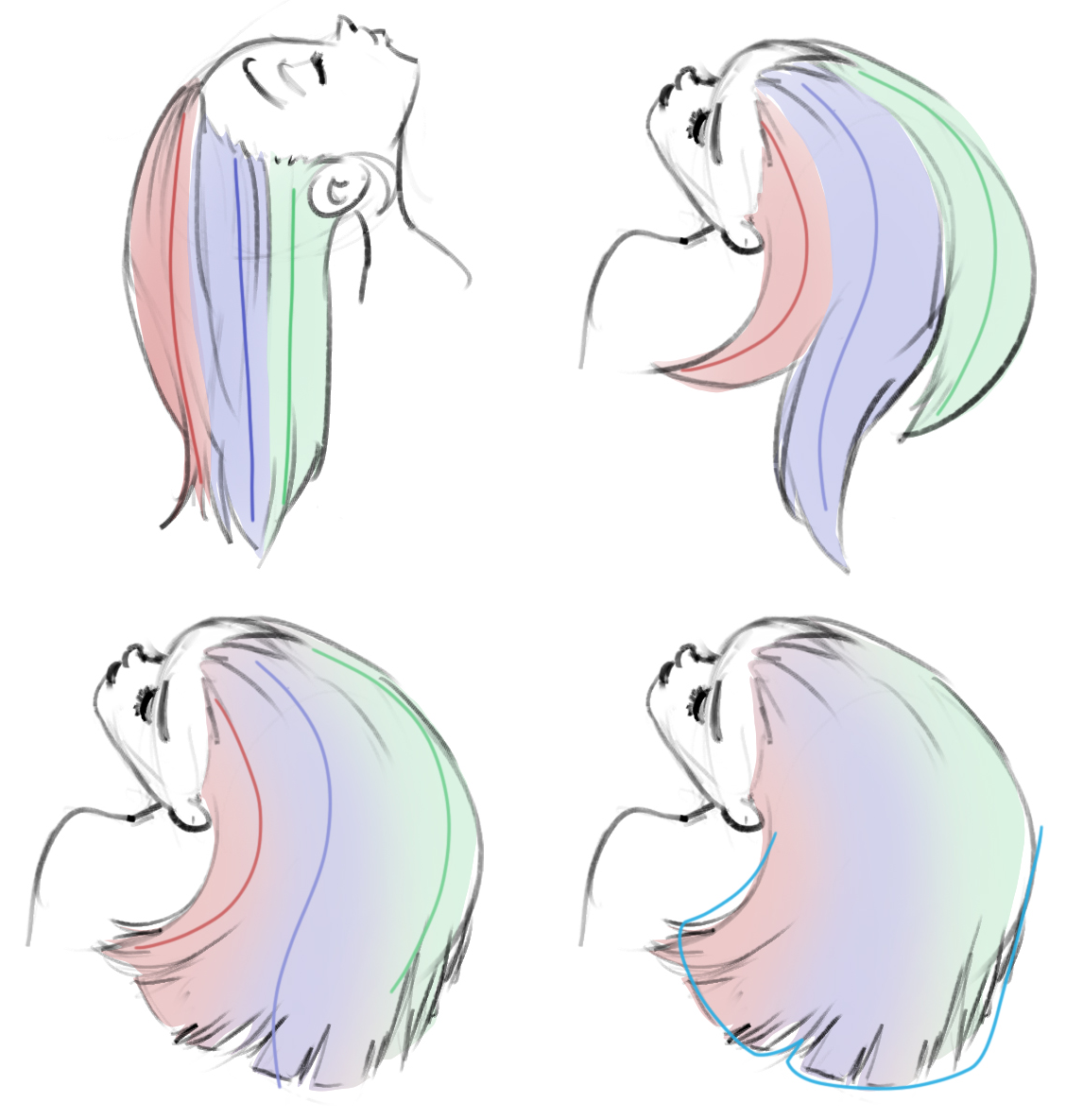

The guide hairs will cause hair to clump as in the upper right, while faded affinities or an outline-based guide (below, left and right) would allow for more natural motion if desired.

And because there are only a couple of them, their finer simulated characteristics can be tweaked and re-tweaked with minimal time. So an artist can fine-tune a flick of the ponytail or a puff of air on the bangs to create the desired effect, and not have to trust to chance that it’ll look like that in the final product.
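To make the guide-hair idea a little more concrete, here is a toy sketch in Python. It is not the method from the paper: the Gaussian falloff, the function names `affinities` and `nudge`, and the `falloff` and `strength` parameters are all assumptions made up for illustration. It only shows the general flavor of weighting each bulk hair by its "affinity" to a few guide hairs and then moving it by a blend of the guides' displacements.

```python
import math

def affinities(root, guide_roots, falloff=1.0):
    """Weights of one bulk hair toward each guide hair, summing to 1.

    Assumed scheme: affinity fades with root-to-root distance using a
    Gaussian falloff. A real system may define affinity differently.
    """
    raw = [math.exp(-(math.dist(root, g) / falloff) ** 2) for g in guide_roots]
    total = sum(raw)
    if total == 0.0:
        # All guides are too far away to register; fall back to equal weights.
        return [1.0 / len(raw)] * len(raw)
    return [w / total for w in raw]

def nudge(point, guide_offsets, weights, strength=1.0):
    """Shift a bulk-hair point by the affinity-weighted guide displacement.

    guide_offsets holds, for each guide hair, how far its detailed
    simulation moved it; strength=0 leaves the bulk hair untouched.
    """
    dims = len(point)
    shift = [
        sum(w * off[i] for w, off in zip(weights, guide_offsets))
        for i in range(dims)
    ]
    return tuple(p + strength * s for p, s in zip(point, shift))

# A bulk hair rooted midway between two guides follows both equally.
w = affinities((0.0, 0.0), [(-1.0, 0.0), (1.0, 0.0)])
tip = nudge((1.0, 2.0), [(1.0, 0.0), (0.0, 1.0)], w)
```

In this toy version, a small `falloff` makes hairs cling tightly to their nearest guide, which loosely mirrors the clumping described in the caption above, while a larger one spreads the influence out.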

This isn’t a trivial thing to engineer, of course, and much of the paper describes the schemes the team created to make sure that no weirdness occurs because of the interactions of the high-def and low-def hair systems.

It’s still very early: the system isn’t meant to simulate more complex hair motions like twisting, and the researchers want to add better ways of spreading out the affinity of the bulk hair for the special guide hairs (as seen at right). But no doubt there are animators out there who can’t wait to get their hands on this once it gets where it’s going.

from TechCrunch https://ift.tt/2uABL0S
