The FCC has officially issued this year’s Broadband Deployment Report, summarizing the extent to which the agency and industry have closed the digital divide in this country. But not everyone agrees with it: “The rosy picture the report paints about the status of broadband deployment is fundamentally at odds with reality,” said Commissioner Geoffrey Starks in a lengthy dissenting statement.

The yearly report, mandated by Congress, documents things like new broadband subscribers in rural areas and the expansion of broadband into new regions. The one issued today proclaims cheerfully:

> [The FCC] has made closing the digital divide between Americans with, and without, access to modern broadband networks its top priority. As a result of those efforts, the digital divide has narrowed substantially, and more Americans than ever before have access to high-speed broadband.
>
> We find, for a second consecutive year, that advanced telecommunications capability is being deployed on a reasonable and timely basis.

Naturally the FCC wants to highlight the progress made rather than dwell on failures, but this year the failures are highly germane, as Starks points out, largely because a single error threw off the results by millions.

The statistics in the report are based on forms filled out by broadband providers, which seem to go unchecked even in the case of massive outliers. The provider Barrier Free reported having gone from zero subscribers in March of 2017 to, seven months later, serving the entirety of New York state with state-of-the-art gigabit fiber connections, an error Starks puts at over 62 million connections. There are so many things wrong with this filing that the freshest intern at the Commission should have flagged it as suspect.

Instead, the data was accepted as gospel, and only a full year later did the advocacy group Free Press notice the discrepancy and bring it to the FCC’s attention.

That this error, so enormous in scope, so obvious and so consequential (it skewed the national numbers by large amounts), was not detected, and once detected was only cursorily addressed, leaves Starks flabbergasted:

> The fact that a 2019 Broadband Deployment Report with an error of over 62 million connections was circulated to the full Commission raises serious questions. Was the Chairman’s office aware of the errors when it circulated the draft report? If not, why didn’t an “outlier” detection function raise alarms with regard to Barrier Free? Also, once the report was corrected, the fact that such a large number of connections came out of the report’s underlying data without changing the report’s conclusion, and without resulting in a substantial change to the report, calls into question the extent to which the report and its conclusions depend on and flow from data.

In other words, if the numbers can change that much and the conclusions stay the same, what exactly are the conclusions based on?

Starks is the Commission’s most recently appointed member and one of its two Democrats, the other being Jessica Rosenworcel (the Commission maintains a 3:2 party balance in favor of the current administration). Both have been outspoken in their criticism of the way the Broadband Deployment Report is researched and issued.

The same data is used to create the FCC’s broadband map, which ostensibly shows which carriers and speeds are available in your area. But that map has many problems.

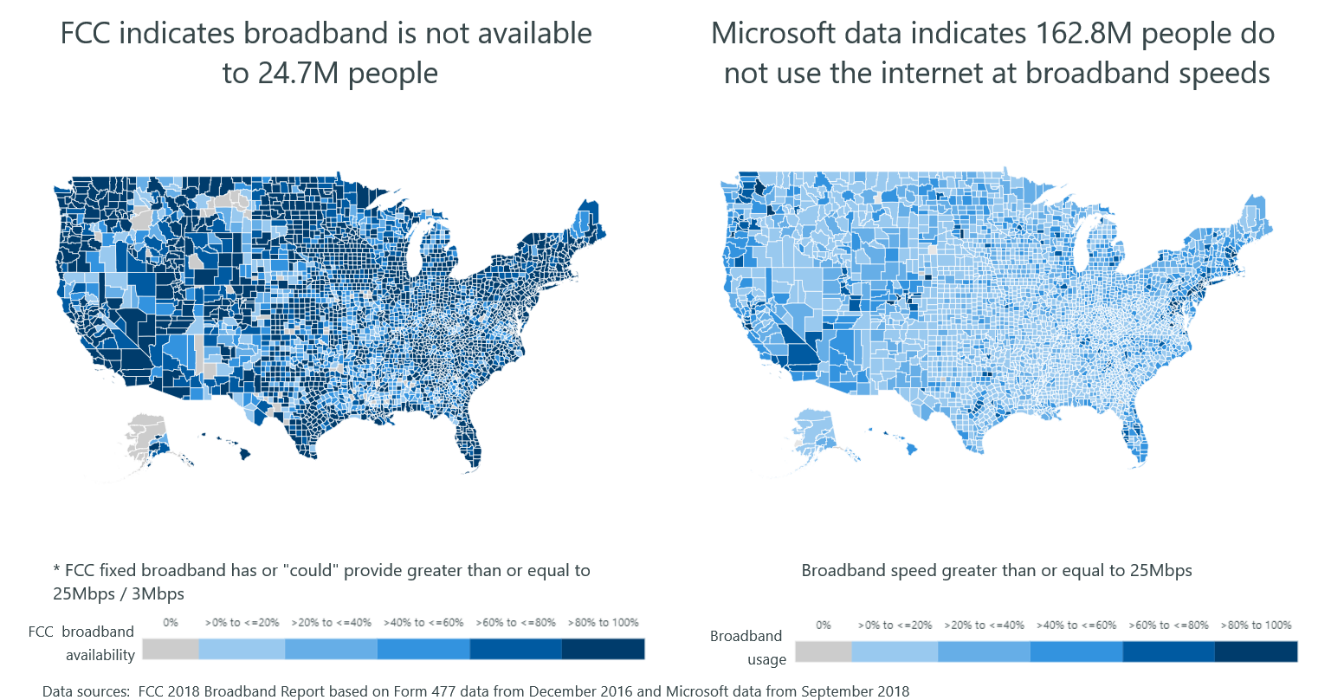

The data is broken down only by census block, a unit that varies a great deal in size: some are tiny, some enormous. Yet if one company provides service to one person in that block, the entire block is considered “served” with that broadband capability. The resulting map is so full of inaccuracies as to be useless, many argue, including Microsoft, which recently said it had observed “significant discrepancies across nearly all counties in all 50 states.”

The good news is that the FCC is aware of this and currently working on ways to improve data gathering. In future years better rules or more location-specific reporting could make the maps and deployment report considerably better. But at present, Starks concludes, “I don’t believe that we know what the state of broadband deployment is in the U.S. with sufficient accuracy.”

Commissioner Rosenworcel was similarly unsparing in her dissent.

“This report deserves a failing grade,” she wrote. “Putting aside the embarrassing fumble of the FCC blindly accepting incorrect data for the original version of this report, there are serious problems with its basic methodology. Time and again this agency has acknowledged the grave limitations of the data we collect to assess broadband deployment.”

Nor do the data capture problems that industry-filed forms are unlikely to reveal, such as redlining, shady business practices and high prices for the broadband that is available.

“We will never manage problems we do not measure,” she continued. “Our ability to address the challenge of uneven internet access across the country is only made more challenging by our inability to be frank about the state of deployment today. Moreover, we need to be thoughtful about how impediments to adoption, like affordability, are an important part of the digital equity equation and our national broadband challenge.”

The Republican Commissioners, Brendan Carr and Michael O’Rielly, supported the report and did not mention the systematic data sourcing problems or indeed the enormous error that caused the draft of this report to be totally off base. O’Rielly did have an objection, however. He is “dismayed by the report’s reliance on purported ‘insufficient evidence’ as a basis for maintaining—for yet another year in a row—an outdated siloed approach to evaluating fixed and mobile broadband, rather than examining both markets as one.”

This has been suggested before and is a dangerously bad idea.

To be fair, the report isn’t one big error. There’s more to it than repackaging the aspirational numbers of the telecoms industry, though that’s a big part of it. It still holds interesting data that can be used in apples-to-apples comparisons with previous years. But more than ever, that data and any conclusions drawn from it, not to mention any rules or legislation based on it, should be taken with a grain of salt.

from TechCrunch https://tcrn.ch/2Wzb2S4