Alex Jones’ Infowars is a fake news peddler. But Facebook deleting its Page could ignite a fire that consumes the network. Still, some critics are asking why it hasn’t done so already.

This week Facebook held an event with journalists to discuss how it combats fake news. The company’s recently appointed head of News Feed, John Hegeman, explained that, “I guess just for being false, that doesn’t violate the community standards. I think part of the fundamental thing here is that we created Facebook to be a place where different people can have a voice.”

In response, CNN’s Oliver Darcy tweeted: “I asked them why InfoWars is still allowed on the platform. I didn’t get a good answer.” BuzzFeed’s Charlie Warzel meanwhile wrote that allowing the Infowars Page to exist shows that “Facebook simply isn’t willing to make the hard choices necessary to tackle fake news.”

Facebook’s own Twitter account tried to rebuke Darcy by tweeting, “We see Pages on both the left and the right pumping out what they consider opinion or analysis – but others call fake news. We believe banning these Pages would be contrary to the basic principles of free speech.” But harm can be minimized without full-on censorship.

There is no doubt that Facebook hides behind political neutrality. It fears driving away conservative users for both business and stated mission reasons. That strategy is exploited by those like Jones who know that no matter how extreme and damaging their actions, they’ll benefit from equivocation that implies ‘both sides are guilty,’ with no regard for degree.

Instead of being banned from Facebook, Infowars and sites like it that constantly and purposely share dangerous hoaxes and conspiracy theories should be heavily down-ranked in the News Feed.

Effectively, they should be quarantined, so that when they or their followers share their links, no one else sees them.

“We don’t have a policy that stipulates that everything posted on Facebook must be true — you can imagine how hard that would be to enforce,” a Facebook spokesperson told TechCrunch. “But there’s a very real tension here. We work hard to find the right balance between encouraging free expression and promoting a safe and authentic community, and we believe that down-ranking inauthentic content strikes that balance. In other words, we allow people to post it as a form of expression, but we’re not going to show it at the top of News Feed.”

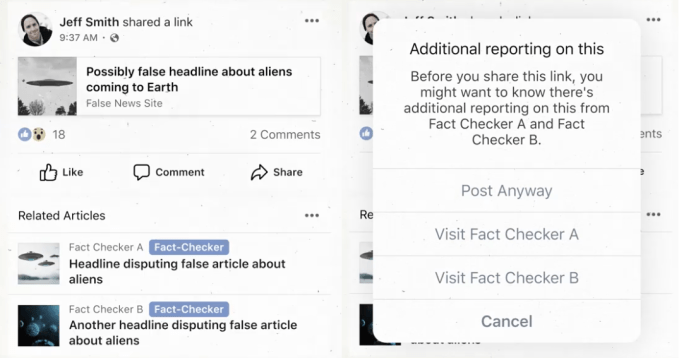

Facebook already reduces the future views of posts by roughly 80 percent when they’re established as false by its third-party fact checkers like PolitiFact and the Associated Press. For repeat offenders, I think that reduction in visibility should be closer to 100 percent of News Feed views. What Facebook does do to those whose posts are frequently labeled as false by its checkers is “remove their monetization and advertising privileges to cut off financial incentives, and dramatically reduce the distribution of all of their Page-level or domain-level content on Facebook.”

The company wouldn’t comment directly about whether Infowars has already been hit with that penalty, noting “We can’t disclose whether specific Pages or domains are receiving such a demotion (it becomes a privacy issue).” For any story fact checked as false, it shows related articles from legitimate publications to provide other perspectives on the topic, and notifies people who have shared it or are about to.

But that doesn’t solve for the initial surge of traffic. Unfortunately, Facebook’s limited array of fact-checking partners is so overloaded that it can only get to so many BS stories quickly. That’s a strong endorsement for dedicating more funding to organizations like Snopes, preferably from even-keeled non-profits, though the risks of governments or Facebook chipping in might be worth it.

Given that fact-checking will likely never scale to be instantly responsive to all fake news in all languages, Facebook needs a more drastic option to curtail the spread of this democracy-harming content on its platform. That might mean a full loss of News Feed posting privileges for a certain period of time. That might mean that links re-shared by the supporters or agents of these pages get zero distribution in the feed.

But it shouldn’t mean their posts or Pages are deleted, or that their links can’t be opened unless they clearly violate Facebook’s core content policies.

Why downranking and quarantine? Because banning would only stoke conspiratorial curiosity about these inaccurate outlets. Trolls will use the bans as a badge of honor, saying, “Facebook deleted us because it knows what we say is true.”

They’ll claim they’ve been unfairly removed from the proxy for public discourse that exists because of the size of Facebook’s private platform.

What we’ll have on our hands is “but her emails!” 2.0.

People who swallowed the propaganda of “her emails”, much of which was pushed by Alex Jones himself, assumed that Hillary Clinton’s deleted emails must have contained evidence of some unspeakable wrongdoing — something so bad it outweighed anything done by her opponent, even when the accusations against him had evidence and witnesses aplenty.

If Facebook deleted the Pages of Infowars and their ilk, it would be used as a rallying cry that Jones’ claims were actually clairvoyance: that he must have had even worse truths to tell about his enemies, and so he had to be cut down. It would turn him into a martyr.

Those who benefit from Infowars’ bluster would use Facebook’s removal of its Page as evidence that it’s massively biased against conservatives. They’d push their political allies to vindictively regulate Facebook beyond what’s actually necessary. They’d call for people to delete their Facebook accounts and decamp to some other network that’s much more of a filter bubble than what some consider Facebook to already be. That would further divide the country and the world.

When someone has a terrible, contagious disease, we don’t execute them. We quarantine them. That’s what should happen here. The exception should be for posts that cause physical harm offline. That will require tough judgement calls, but inciting mob violence, for example, should not be tolerated. Some of Infowars’ posts, such as those about Pizzagate that led to a shooting, might qualify for deletion by that standard.

Facebook is already trying to grapple with this after rumors and fake news spread through forwarded WhatsApp messages have led to crowds lynching people in India and attacks in Myanmar. Peer-to-peer chat lacks the same centralized actors to ban, though WhatsApp is now at least marking messages as forwarded, and it will need to do more. But for less threatening yet still blatantly false news, quarantining may be sufficient. This also leaves room for counterspeech, where disagreeing commenters can refute posts or share their own rebuttals.

Few people regularly visit the Facebook Pages they follow. They wait for the content to come to them through News Feed, via posts from the Page itself and shares by their friends. Eliminating that virality vector would severely limit this fake news’ ability to spread without requiring the posts or Pages to be deleted, or the links to be rendered unopenable.

If Facebook wants to uphold a base level of free speech, it may be prudent to let the liars have their voice. However, Facebook is under no obligation to amplify that speech, and the fakers have no entitlement for their speech to be amplified.

Image Credit: Getty – Tom Williams/CQ Roll Call, Flickr Sean P. Anderson CC

from TechCrunch https://ift.tt/2zBRgLx